Node.js Performance #1: "for...of" vs forEach()

Understanding the Performance Differences Between 'for...of' and 'forEach' in Node.js


Intro

At some point, you've probably heard that JavaScript is slow. It's a common refrain, often used to "explain" performance issues in production code. But that perception isn't always accurate. JavaScript can indeed struggle in certain circumstances, yet it is also a versatile language that can reach impressive speeds when the code is optimized effectively. So while slowdowns do happen, it's a mistake to dismiss JavaScript on the strength of this well-worn stereotype alone.

In this series of articles, we'll look at how JavaScript can be fast enough and why developers often slow it down, in most cases without even realizing it.

Today, we'll start with a very common routine – array iteration.

Brief History

Long ago, it was common to use the imperative style for array iteration processes, which required finding the array's length, defining a for loop with an index variable, and so on. For example, the sum of an array in C would look like this:

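```c
#include <stddef.h>

// In C, an array parameter decays to a pointer, so sizeof(arr) / sizeof(arr[0])
// inside the function would NOT give the element count: the caller passes it in.
int arraySum(const int arr[], size_t length) {
    int sum = 0;

    // Iterate through the array and add each element to the sum
    for (size_t i = 0; i < length; i++) {
        sum += arr[i];
    }

    return sum;
}
```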

Of course, we're talking about JavaScript and in JavaScript it would be a bit simpler:

```javascript
function arraySum(arr) {
  var sum = 0;

  // Iterate through the array and add each element to the sum
  for (var i = 0; i < arr.length; i++) {
    sum += arr[i];
  }

  return sum;
}
```

Looks great, doesn't it? But those of you who started with frontend development long ago will remember the warning that such "loops can block the browser event loop, and this can cause issues with user interactions like button clicks, jQuery effects, etc." To fix this, the recommended approach was the functional style (shown here with the modern native forEach method):

```javascript
function arraySum(arr) {
  var sum = 0;

  // Iterate through the array with the forEach method
  arr.forEach((n) => {
    sum += n;
  });

  return sum;
}
```

It looked like a silver bullet that would fix all our problems with loops forever. On top of that, it was more elegant and more readable, so we quickly got used to it. But things are not that obvious...

Performance

Once JavaScript expanded to the backend (Node.js), we began using microservice architectures more often. Our applications needed ever more computational resources, so we scaled horizontally. Eventually, even a simple backend consisted of a few microservices running on platforms like Kubernetes at a cloud provider, scaling automatically with client load.

With the increasing number of abstractions, we began to lose sight of how the code we write actually runs on the CPU. So, let's prepare for a deep dive.

Defining functions

Let's return to our array-summing function and examine two different implementations: functional and imperative.

The functional one:

```javascript
// Sum in functional approach
function sumFunctional(arr) {
  let sum = 0;
  arr.forEach((n) => {
    sum += n;
  });
  return sum;
}
```

And the imperative one:

```javascript
// Sum in imperative approach
function sumImperative(arr) {
  let sum = 0;
  for (const n of arr) {
    sum += n;
  }
  return sum;
}
```

From an algorithmic analysis perspective, they both have linear time complexity. But what about the actual execution time?

Benchmarking

So, let's benchmark our functions and see which one is faster. Since they look similar, what could go wrong?

How will we benchmark them? The idea is as follows:

1. Generate an array of random numbers.
2. Warm up: call each function many times (100 in the runs below) to give the JS engine time to compile and optimize it.
3. Run the benchmark: call each function a couple of dozen more times (20 in the runs below), this time measuring the execution time.
4. Check the results.
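The four steps above could look roughly like this in code. This is a minimal sketch, not the benchmark linked below: the helper names makeRandomArray and bench are hypothetical, it relies on performance.now() (a global in Node.js 16 and later), and it reports the average time per call, so its numbers are not directly comparable with the results table.

```javascript
function sumFunctional(arr) {
  let sum = 0;
  arr.forEach((n) => {
    sum += n;
  });
  return sum;
}

function sumImperative(arr) {
  let sum = 0;
  for (const n of arr) {
    sum += n;
  }
  return sum;
}

// Step 1: an array of random numbers in [0, 1).
function makeRandomArray(length) {
  return Array.from({ length }, () => Math.random());
}

// Steps 2 and 3: warm up, then time `measured` calls.
// Results are accumulated into `sink` so the calls can't be dropped as dead code.
function bench(fn, arr, warmup = 100, measured = 20) {
  let sink = 0;
  for (let i = 0; i < warmup; i++) sink += fn(arr);
  const start = performance.now();
  for (let i = 0; i < measured; i++) sink += fn(arr);
  const totalMs = performance.now() - start;
  return { totalMs, avgMs: totalMs / measured, sink };
}

// Step 4: check the results.
for (const length of [1_000, 10_000, 100_000]) {
  const arr = makeRandomArray(length);
  const functional = bench(sumFunctional, arr);
  const imperative = bench(sumImperative, arr);
  console.log(
    `${length}: forEach ${functional.avgMs.toFixed(3)} ms, ` +
      `for...of ${imperative.avgMs.toFixed(3)} ms per call`,
  );
}
```

Keeping the warm-up and measured phases in one helper makes it easy to apply exactly the same procedure to both functions.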

The source code for the benchmarking can be found here.

Results

I used 100 warm-up cycles followed by 20 measured cycles, and repeated the experiment for several array lengths to get more timing data. The results are as follows:

| Array length | sumFunctional(), ms | sumImperative(), ms |
|---|---|---|
| 1_000 | 0.1732520000000477 | 0.04095899999970243 |
| 10_000 | 1.8093760000001566 | 0.384876000000304 |
| 100_000 | 17.51308599999993 | 4.250372999999854 |
| 1_000_000 | 187.16633200000024 | 40.233208999998624 |

As you can see, sumImperative() is consistently faster than sumFunctional(), by a factor of roughly 4 to 4.7.
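The speedup can be checked directly from the table. The snippet below simply divides the forEach time by the for...of time for each row; the numbers are copied from the table, and nothing is re-measured.

```javascript
// Timings copied from the results table: [array length, sumFunctional() ms, sumImperative() ms]
const results = [
  [1_000, 0.1732520000000477, 0.04095899999970243],
  [10_000, 1.8093760000001566, 0.384876000000304],
  [100_000, 17.51308599999993, 4.250372999999854],
  [1_000_000, 187.16633200000024, 40.233208999998624],
];

// Speedup of for...of over forEach() = forEach time / for...of time
const speedups = results.map(([length, functionalMs, imperativeMs]) => ({
  length,
  speedup: functionalMs / imperativeMs,
}));

for (const { length, speedup } of speedups) {
  console.log(`${length}: ${speedup.toFixed(2)}x`);
}
// 1000: 4.23x
// 10000: 4.70x
// 100000: 4.12x
// 1000000: 4.65x
```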

That's significant for such a small change. If you run frequent computations over long arrays, you should definitely use the imperative approach to improve performance. And if you run scaled microservices on the backend, there's little reason to fear that such loops "block" the event loop: forEach() is synchronous too, so the faster for...of loop actually holds the event loop for less time.

But you might say, "Come on, Node.js has Google's V8 JS engine under the hood, it's super fast and super cool, it optimises everything, doesn't it?"

Ok, let's dive deeper :)

Deep dive into byte code

Yes, Node.js uses V8 as the JavaScript engine. V8 is one of the fastest, if not the fastest, JavaScript engines. Let's find out what happens when we run our code.

V8 architecture

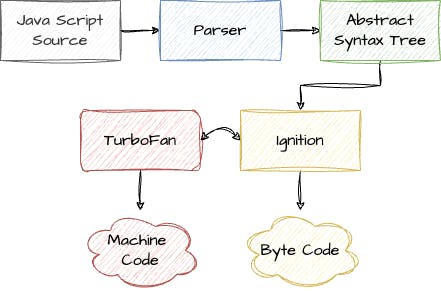

I won't delve too deeply here, as it would require another lengthy article, but in general, V8 has the following architecture:

First, V8 parses the JS source into an Abstract Syntax Tree (AST). Ignition, V8's interpreter, then generates byte code from the AST (applying a few simple optimizations along the way) and executes it, recording feedback about the types and shapes it sees at run time (we'll meet these feedback vectors later in the byte code). When a function becomes hot, TurboFan, the optimizing compiler, uses that feedback to compile it into optimized machine code. If the assumptions baked into that machine code stop holding, V8 deoptimizes the function and falls back to Ignition's byte code.
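To watch this pipeline at work, you can ask V8 to log optimization and deoptimization events with the --trace-opt and --trace-deopt flags, which Node.js passes through to V8. Treat this as an illustration under assumptions: the file name tiers.js is hypothetical, the log format differs between versions, and V8 is likely, but not guaranteed, to optimize add during the hot loop and deoptimize it when a string shows up.

```javascript
// tiers.js (hypothetical file name). Try running:
//   node --trace-opt --trace-deopt tiers.js
function add(a, b) {
  return a + b;
}

// Phase 1: a hot loop that only ever passes small integers.
// Ignition records number-only feedback for `a + b`, and TurboFan
// will probably compile `add` to optimized machine code at some point.
let total = 0;
for (let i = 0; i < 1_000_000; i++) {
  total = add(total, 1) % 1000;
}

// Phase 2: a string argument breaks the number-only assumption,
// which may deoptimize `add` back to the interpreter.
const label = add("total: ", total);
console.log(label); // total: 0
```

Without the flags the script just prints its result; the flags only add V8's own log lines.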

Now that we have a general picture of how V8 turns JS into machine code, let's examine how our two JS functions were converted into byte code and where the performance difference lies.

sumImperative() byte code

To print the V8 byte code generated for a specific function, run Node with the following flags:

```shell
$ node --print-bytecode --print-bytecode-filter=<function name> <file>
```
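A small file to try the command on might look like this. It's a sketch: the file name sum-bytecode.js is hypothetical, and your output will differ from the listing below in addresses, register numbers, and possibly instruction names, depending on the Node.js/V8 version. Both functions are called on purpose: V8 compiles most functions lazily, so byte code is generated (and printed) only once a function actually runs.

```javascript
// sum-bytecode.js (hypothetical file name). Then run, for example:
//   node --print-bytecode --print-bytecode-filter=sumImperative sum-bytecode.js
function sumImperative(arr) {
  let sum = 0;
  for (const n of arr) {
    sum += n;
  }
  return sum;
}

function sumFunctional(arr) {
  let sum = 0;
  arr.forEach((n) => {
    sum += n;
  });
  return sum;
}

// Call each function at least once so that V8 actually compiles it.
const sample = [1, 2, 3];
console.log(sumImperative(sample), sumFunctional(sample)); // 6 6
```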

So, let's take a look at what Ignition provided us:

```text
[generated bytecode for function: sumImperative (0x14c0a279d649 <SharedFunctionInfo sumImperative>)]
Bytecode length: 126
Parameter count 2
Register count 13
Frame size 104
Bytecode age: 0
716 S> 0x14c0a279fbf6 @ 0 : 0c LdaZero
0x14c0a279fbf7 @ 1 : c4 Star0
738 S> 0x14c0a279fbf8 @ 2 : b1 03 00 02 GetIterator a0, [0], [2]
0x14c0a279fbfc @ 6 : bf Star5
0x14c0a279fbfd @ 7 : 2d f5 00 04 GetNamedProperty r5, [0], [4]
0x14c0a279fc01 @ 11 : c0 Star4
0x14c0a279fc02 @ 12 : 12 LdaFalse
0x14c0a279fc03 @ 13 : be Star6
0x14c0a279fc04 @ 14 : 19 ff f1 Mov <context>, r9
0x14c0a279fc07 @ 17 : 11 LdaTrue
0x14c0a279fc08 @ 18 : be Star6
733 S> 0x14c0a279fc09 @ 19 : 5d f6 f5 06 CallProperty0 r4, r5, [6]
0x14c0a279fc0d @ 23 : ba Star10
0x14c0a279fc0e @ 24 : 9f 07 JumpIfJSReceiver [7] (0x14c0a279fc15 @ 31)
0x14c0a279fc10 @ 26 : 65 c6 00 f0 01 CallRuntime [ThrowIteratorResultNotAnObject], r10-r10
0x14c0a279fc15 @ 31 : 2d f0 01 08 GetNamedProperty r10, [1], [8]
0x14c0a279fc19 @ 35 : 96 1c JumpIfToBooleanTrue [28] (0x14c0a279fc35 @ 63)
0x14c0a279fc1b @ 37 : 2d f0 02 0a GetNamedProperty r10, [2], [10]
0x14c0a279fc1f @ 41 : ba Star10
0x14c0a279fc20 @ 42 : 12 LdaFalse
0x14c0a279fc21 @ 43 : be Star6
0x14c0a279fc22 @ 44 : 19 f0 f9 Mov r10, r1
733 S> 0x14c0a279fc25 @ 47 : 19 f9 f7 Mov r1, r3
749 S> 0x14c0a279fc28 @ 50 : 0b f9 Ldar r1
756 E> 0x14c0a279fc2a @ 52 : 38 fa 0c Add r0, [12]
0x14c0a279fc2d @ 55 : 19 fa f0 Mov r0, r10
0x14c0a279fc30 @ 58 : c4 Star0
722 E> 0x14c0a279fc31 @ 59 : 89 2a 00 0d JumpLoop [42], [0], [13] (0x14c0a279fc07 @ 17)
0x14c0a279fc35 @ 63 : 0d ff LdaSmi [-1]
0x14c0a279fc37 @ 65 : bc Star8
0x14c0a279fc38 @ 66 : bd Star7
0x14c0a279fc39 @ 67 : 8a 05 Jump [5] (0x14c0a279fc3e @ 72)
0x14c0a279fc3b @ 69 : bc Star8
0x14c0a279fc3c @ 70 : 0c LdaZero
0x14c0a279fc3d @ 71 : bd Star7
0x14c0a279fc3e @ 72 : 10 LdaTheHole
0x14c0a279fc3f @ 73 : a6 SetPendingMessage
0x14c0a279fc40 @ 74 : bb Star9
0x14c0a279fc41 @ 75 : 0b f4 Ldar r6
0x14c0a279fc43 @ 77 : 96 23 JumpIfToBooleanTrue [35] (0x14c0a279fc66 @ 112)
0x14c0a279fc45 @ 79 : 19 ff f0 Mov <context>, r10
0x14c0a279fc48 @ 82 : 2d f5 03 0e GetNamedProperty r5, [3], [14]
0x14c0a279fc4c @ 86 : 9e 1a JumpIfUndefinedOrNull [26] (0x14c0a279fc66 @ 112)
0x14c0a279fc4e @ 88 : b9 Star11
0x14c0a279fc4f @ 89 : 5d ef f5 10 CallProperty0 r11, r5, [16]
0x14c0a279fc53 @ 93 : 9f 13 JumpIfJSReceiver [19] (0x14c0a279fc66 @ 112)
0x14c0a279fc55 @ 95 : b8 Star12
0x14c0a279fc56 @ 96 : 65 c6 00 ee 01 CallRuntime [ThrowIteratorResultNotAnObject], r12-r12
0x14c0a279fc5b @ 101 : 8a 0b Jump [11] (0x14c0a279fc66 @ 112)
0x14c0a279fc5d @ 103 : ba Star10
0x14c0a279fc5e @ 104 : 0c LdaZero
0x14c0a279fc5f @ 105 : 1c f3 TestReferenceEqual r7
0x14c0a279fc61 @ 107 : 98 05 JumpIfTrue [5] (0x14c0a279fc66 @ 112)
0x14c0a279fc63 @ 109 : 0b f0 Ldar r10
0x14c0a279fc65 @ 111 : a8 ReThrow
0x14c0a279fc66 @ 112 : 0b f1 Ldar r9
0x14c0a279fc68 @ 114 : a6 SetPendingMessage
0x14c0a279fc69 @ 115 : 0c LdaZero
0x14c0a279fc6a @ 116 : 1c f3 TestReferenceEqual r7
0x14c0a279fc6c @ 118 : 99 05 JumpIfFalse [5] (0x14c0a279fc71 @ 123)
0x14c0a279fc6e @ 120 : 0b f2 Ldar r8
0x14c0a279fc70 @ 122 : a8 ReThrow
766 S> 0x14c0a279fc71 @ 123 : 0b fa Ldar r0
777 S> 0x14c0a279fc73 @ 125 : a9 Return
Constant pool (size = 4)
0x14c0a279fb91: [FixedArray] in OldSpace
- map: 0x2c7d20b40211 <Map(FIXED_ARRAY_TYPE)>
- length: 4
0: 0x2c7d20b49221 <String[4]: #next>
1: 0x2c7d20b45f41 <String[4]: #done>
2: 0x2c7d20b44819 <String[5]: #value>
3: 0x2c7d20b470b1 <String[6]: #return>
Handler Table (size = 32)
from to hdlr (prediction, data)
( 17, 63) -> 69 (prediction=0, data=9)
( 82, 101) -> 103 (prediction=0, data=10)
Source Position Table (size = 24)
0x14c0a279fc79 <ByteArray[24]>
```

It may look confusing, even intimidating, but bear with me: by the end of the article, it should feel much more approachable :)

So, let's skip the header and the footer (they contain metadata) and focus on the byte code:

```text
0x14c0a279fbf6 @ 0 : 0c LdaZero
```

The first part (0x14c0a279fbf6) is the absolute memory address of the instruction. It changes every time you run the program, so for now we only need to know that it's where the instruction lives in memory. Next, after the '@', we see 0: the instruction's offset from the start of the function's byte code, which always starts at 0. These offsets are very useful when we deal with jumps. After the colon comes the encoded instruction in hexadecimal: the first byte (0c here) is the opcode, and any following bytes are its operands, so an instruction can take one or more bytes. The last part is the human-readable instruction itself, LdaZero. In the full listing, some lines also start with a prefix such as 716 S> or 756 E>: this is the position in the source file that the instruction maps back to, marked S for a statement or E for an expression.
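To make the anatomy of a line concrete, here is a hypothetical helper, parseBytecodeLine, that splits one line of --print-bytecode output into the parts just described. The regular expression is an assumption based on the lines shown in this article, not an official format; it also ignores V8's Wide/ExtraWide prefix byte codes, for which the first byte is not the real opcode.

```javascript
// Hypothetical helper: splits one line of --print-bytecode output into fields.
// The pattern matches the layout shown in this article; it is not an official spec.
const LINE_RE =
  /^\s*(?:(\d+)\s+([SE])>\s+)?(0x[0-9a-f]+)\s+@\s+(\d+)\s*:\s*((?:[0-9a-f]{2}\s+)+)(\S+)\s*(.*)$/;

function parseBytecodeLine(line) {
  const m = LINE_RE.exec(line);
  if (m === null) return null; // headers, constant pool entries, etc.
  const bytes = m[5].trim().split(/\s+/);
  return {
    sourcePosition: m[1] === undefined ? null : Number(m[1]), // e.g. 716 in "716 S>"
    positionKind: m[2] === undefined ? null : m[2], // "S" = statement, "E" = expression
    address: m[3], // absolute address, differs on every run
    offset: Number(m[4]), // offset from the start of the function's byte code
    opcode: bytes[0], // first encoded byte
    operandBytes: bytes.slice(1), // remaining encoded bytes
    mnemonic: m[6], // human-readable instruction name
    operands: m[7], // decoded operands, e.g. "a0, [0], [2]"
  };
}

console.log(parseBytecodeLine("0x14c0a279fbf6 @ 0 : 0c LdaZero"));
console.log(
  parseBytecodeLine(
    "722 E> 0x14c0a279fc31 @ 59 : 89 2a 00 0d JumpLoop [42], [0], [13] (0x14c0a279fc07 @ 17)",
  ),
);
```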

So now we know how to read a line of byte code. Let's map the byte code back to our logic.

First, let's find where our sum variable is initialized:

```text
0x14c0a279fbf6 @ 0 : 0c LdaZero
0x14c0a279fbf7 @ 1 : c4 Star0
```

Here, the LdaZero command loads 0 into the accumulator, and then Star0 stores the accumulator in the r0 register. So, what are the accumulator and r0 register?

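Ignition is a register machine: it uses registers r0, r1, r2, ... to hold the inputs and outputs of instructions, and each instruction specifies which registers it uses. Despite the name, they are not global variables: they are slots in the function's own stack frame, much like local variables (the header's Register count 13 and Frame size 104 match 13 registers of 8 bytes each on a 64-bit machine). In addition, Ignition has a special accumulator register that many instructions read from or write to implicitly. Think of the accumulator as a special variable that always holds the result of the last operation.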

So, we initialized the r0 register as our sum variable. Let's move on.
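The register-plus-accumulator model can be sketched as a toy interpreter. This is not V8: the run function and the program format are hypothetical, only four instructions borrowed from the listing (LdaZero, Ldar, Star, Add) plus Return are modeled, feedback slots are omitted, and ["Star", "r0"] stands for V8's short form Star0. The program is a straight-line version of sum += ... over two arguments.

```javascript
// A toy accumulator machine, NOT V8. It only models the idea that instructions
// read/write one implicit accumulator plus named registers (r0, r1, ... and a0, a1, ...).
function run(program, args) {
  const regs = {};
  for (let i = 0; i < args.length; i++) {
    regs[`a${i}`] = args[i]; // parameters are exposed as a0, a1, ...
  }
  let acc; // the implicit accumulator

  for (const [op, reg] of program) {
    switch (op) {
      case "LdaZero": // acc = 0
        acc = 0;
        break;
      case "Ldar": // acc = reg
        acc = regs[reg];
        break;
      case "Star": // reg = acc
        regs[reg] = acc;
        break;
      case "Add": // acc = reg + acc
        acc = regs[reg] + acc;
        break;
      case "Return": // return acc
        return acc;
      default:
        throw new Error(`unsupported instruction: ${op}`);
    }
  }
  throw new Error("program ended without Return");
}

// let sum = 0; sum += a0; sum += a1; return sum;
const sumTwo = [
  ["LdaZero"], ["Star", "r0"],
  ["Ldar", "a0"], ["Add", "r0"], ["Star", "r0"],
  ["Ldar", "a1"], ["Add", "r0"], ["Star", "r0"],
  ["Ldar", "r0"], ["Return"],
];

console.log(run(sumTwo, [3, 4])); // 7
```

Notice how every arithmetic step goes through the accumulator, which is why the real listing is full of Star and Ldar moves.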

Since the for...of statement works with iterable objects, we should expect the iterator protocol (the next() and return() methods and the done and value properties) to show up somewhere.

```text
0x14c0a279fbf8 @ 2 : b1 03 00 02 GetIterator a0, [0], [2]
0x14c0a279fbfc @ 6 : bf Star5
0x14c0a279fbfd @ 7 : 2d f5 00 04 GetNamedProperty r5, [0], [4]
0x14c0a279fc01 @ 11 : c0 Star4
0x14c0a279fc02 @ 12 : 12 LdaFalse
0x14c0a279fc03 @ 13 : be Star6
0x14c0a279fc04 @ 14 : 19 ff f1 Mov <context>, r9
```

The GetIterator instruction loads the object[Symbol.iterator] method from a0, calls it, and stores the resulting iterator in the accumulator. a0 is the first argument of the function; a second argument would be a1, and so on. (The header's Parameter count 2 counts arr plus the implicit this receiver.) The remaining operands, [0] and [2], are feedback slots: indices into the function's feedback vector, where Ignition records what it observes at this instruction. This mechanism is what lets TurboFan optimize based on real run-time behavior. We'll skip them for now.

As usual, the result of the command is stored in the accumulator, so we should store it somewhere. Star5 stores the accumulator value in the r5 register.

Next, GetNamedProperty reads a property of r5 (the iterator) whose name is given by [0], an index into the constant pool. You'll find the constant pool at the bottom of the --print-bytecode output:

```text
Constant pool (size = 4)
0x14c0a279fb91: [FixedArray] in OldSpace
- map: 0x2c7d20b40211 <Map(FIXED_ARRAY_TYPE)>
- length: 4
0: 0x2c7d20b49221 <String[4]: #next>
1: 0x2c7d20b45f41 <String[4]: #done>
2: 0x2c7d20b44819 <String[5]: #value>
3: 0x2c7d20b470b1 <String[6]: #return>
```

So, [0] is for the "next" string. Then, Star4 stores the result of GetNamedProperty in r4.
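One detail worth noticing: the next method is read only once, before the loop, and kept in r4; each iteration then just calls it. A hypothetical iterable that counts how often its next property is read versus called makes this visible from plain JavaScript (countingIterable is an illustrative name, not a built-in):

```javascript
// Hypothetical iterable that counts how often its `next` property is read
// versus how often the method is actually called.
function countingIterable(values) {
  const stats = { nextReads: 0, nextCalls: 0 };
  const iterable = {
    [Symbol.iterator]() {
      let i = 0;
      return {
        get next() {
          stats.nextReads++; // the property lookup (GetNamedProperty "next")
          return () => {
            stats.nextCalls++; // the actual call (CallProperty0)
            return i < values.length
              ? { done: false, value: values[i++] }
              : { done: true, value: undefined };
          };
        },
      };
    },
  };
  return { iterable, stats };
}

const { iterable, stats } = countingIterable([1, 2, 3]);
let sum = 0;
for (const n of iterable) {
  sum += n;
}
console.log(sum, stats.nextReads, stats.nextCalls); // 6 1 4
```

Three values plus the final done result give four calls, but only one lookup of next.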

After doing some preparations and saving the context, the loop starts:

```text
0x14c0a279fc07 @ 17 : 11 LdaTrue
0x14c0a279fc08 @ 18 : be Star6
0x14c0a279fc09 @ 19 : 5d f6 f5 06 CallProperty0 r4, r5, [6]
0x14c0a279fc0d @ 23 : ba Star10
0x14c0a279fc0e @ 24 : 9f 07 JumpIfJSReceiver [7] (0x14c0a279fc15 @ 31)
0x14c0a279fc10 @ 26 : 65 c6 00 f0 01 CallRuntime [ThrowIteratorResultNotAnObject], r10-r10
0x14c0a279fc15 @ 31 : 2d f0 01 08 GetNamedProperty r10, [1], [8]
0x14c0a279fc19 @ 35 : 96 1c JumpIfToBooleanTrue [28] (0x14c0a279fc35 @ 63)
0x14c0a279fc1b @ 37 : 2d f0 02 0a GetNamedProperty r10, [2], [10]
0x14c0a279fc1f @ 41 : ba Star10
0x14c0a279fc20 @ 42 : 12 LdaFalse
0x14c0a279fc21 @ 43 : be Star6
0x14c0a279fc22 @ 44 : 19 f0 f9 Mov r10, r1
0x14c0a279fc25 @ 47 : 19 f9 f7 Mov r1, r3
0x14c0a279fc28 @ 50 : 0b f9 Ldar r1
0x14c0a279fc2a @ 52 : 38 fa 0c Add r0, [12]
0x14c0a279fc2d @ 55 : 19 fa f0 Mov r0, r10
0x14c0a279fc30 @ 58 : c4 Star0
0x14c0a279fc31 @ 59 : 89 2a 00 0d JumpLoop [42], [0], [13] (0x14c0a279fc07 @ 17)
```

Let's summarize a bit:

r0 stores our sum variable; r5 stores the array iterator; r4 stores the "next" property.

From now on, I'll skip some commands for simplicity and describe only the ones that matter for our logic.

With CallProperty0, we call the next method (r4) on the array iterator (r5), and Star10 moves the result from the accumulator to r10. If the result is not a JSReceiver (V8's term for any object, as opposed to a primitive), CallRuntime [ThrowIteratorResultNotAnObject] throws a TypeError; otherwise, JumpIfJSReceiver jumps over it.

Then GetNamedProperty r10, [1] (remember, r10 stores the result of the iterator.next() call) reads the property named by constant-pool entry [1], which is "done", from the object in r10. According to the iterator protocol, done is true once the iterator has finished its work, so JumpIfToBooleanTrue checks whether the iteration is finished and, if so, jumps out of the loop (to offset 63).

If the loop isn't finished, GetNamedProperty r10, [2] reads "value" (entry [2] in the constant pool) from the iterator result. So now our n variable from the JS code is in the accumulator. It is stored in r10 and then shuffled between registers (r10 to r1, r1 to r3), seemingly for technical reasons, before Ldar r1 loads it back into the accumulator. Add r0 then adds r0 (our sum) to the accumulator, and Star0 stores the new value back in r0.

JumpLoop [42] just jumps back by 42 bytes. As you can see, the JumpLoop relative address is 59, so 59 - 42 = 17. And at the address 17, our loop starts.
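Putting the pieces together, the loop corresponds roughly to the following hand-written JavaScript. It's a simplification under stated assumptions: sumDesugared is a hypothetical name, the comments map each line to the instructions discussed above, and the exception handling after the loop (the part that may call iterator.return()) is left out.

```javascript
// A hand-written approximation of sumImperative's byte code (hypothetical name).
// Simplified: the exception handling that may call iterator.return() is omitted.
function sumDesugared(arr) {
  let sum = 0; // LdaZero; Star0
  const iterator = arr[Symbol.iterator](); // GetIterator a0; Star5
  const next = iterator.next; // GetNamedProperty r5, [0] ("next"); Star4
  for (;;) {
    const result = next.call(iterator); // CallProperty0 r4, r5; Star10
    if (Object(result) !== result) {
      // not a JSReceiver -> CallRuntime [ThrowIteratorResultNotAnObject]
      throw new TypeError("Iterator result is not an object");
    }
    if (result.done) break; // GetNamedProperty r10, [1] ("done"); JumpIfToBooleanTrue
    const n = result.value; // GetNamedProperty r10, [2] ("value"); Mov r10, r1
    sum += n; // Ldar r1; Add r0; Star0
  } // JumpLoop back to offset 17
  return sum; // Ldar r0; Return
}

console.log(sumDesugared([1, 2, 3])); // 6
```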

Huh! That's how our 3-line loop turned into 19 lines of byte code. The byte code in turn becomes even more machine code, but we'll skip machine code here.

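Let's finish our method. After the JumpLoop comes cleanup code generated for the iterator protocol: it decides whether the iterator's return() method has to be called (when the loop is left early, for example because of an exception) and rethrows any pending exception. It isn't important for our logic. And finally, we see our return: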

```text
0x14c0a279fc71 @ 123 : 0b fa Ldar r0
0x14c0a279fc73 @ 125 : a9 Return
```

Ldar r0 simply loads r0 into the accumulator, because the Return command returns the value from the accumulator. That's it.
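The cleanup code after the loop exists because for...of must close the iterator by calling its return() method when the loop exits early (for example, via break or an exception), but not when next() reports done. A hypothetical iterable that logs return() calls shows the difference (trackedIterable is an illustrative name). Array iterators have no return() method at all, so in sumImperative that path finds undefined and is skipped by JumpIfUndefinedOrNull.

```javascript
// Hypothetical iterable whose iterator logs calls to return().
function trackedIterable(values) {
  const log = [];
  const iterable = {
    [Symbol.iterator]() {
      let i = 0;
      return {
        next() {
          return i < values.length
            ? { done: false, value: values[i++] }
            : { done: true, value: undefined };
        },
        return() {
          log.push("return() called");
          return { done: true, value: undefined };
        },
      };
    },
  };
  return { iterable, log };
}

// Normal completion: next() reports done, so return() is NOT called.
const full = trackedIterable([1, 2, 3]);
for (const n of full.iterable) {
  void n; // consume every value
}
console.log(full.log); // []

// Early exit via break: for...of closes the iterator by calling return().
const early = trackedIterable([1, 2, 3]);
for (const n of early.iterable) {
  if (n === 2) break;
}
console.log(early.log); // [ 'return() called' ]

// Array iterators don't define return() at all.
console.log(typeof [][Symbol.iterator]().return); // undefined
```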

What can we infer from the byte code? The iteration process is quite straightforward. Apart from calling next() on the iterator in each iteration, there are no extra contexts, closures, or function calls: it's just the iteration logic in a loop, which goes a long way toward explaining why it's so fast. The next stop is the byte code for the functional approach.

sumFunctional() byte code

Since we've already learned a lot about byte code, registers, and commands, it will now be much easier to understand more complex byte code.

So, here is the byte code:

```text
[generated bytecode for function: sumFunctional (0x02c74e79d5f9 <SharedFunctionInfo sumFunctional>)]
Bytecode length: 29
Parameter count 2
Register count 4
Frame size 32
Bytecode age: 0
559 E> 0x2c74e79f71e @ 0 : 83 00 01 CreateFunctionContext [0], [1]
0x2c74e79f721 @ 3 : 1a fa PushContext r0
0x2c74e79f723 @ 5 : 10 LdaTheHole
0x2c74e79f724 @ 6 : 25 02 StaCurrentContextSlot [2]
579 S> 0x2c74e79f726 @ 8 : 0c LdaZero
579 E> 0x2c74e79f727 @ 9 : 25 02 StaCurrentContextSlot [2]
589 S> 0x2c74e79f729 @ 11 : 2d 03 01 00 GetNamedProperty a0, [1], [0]
0x2c74e79f72d @ 15 : c3 Star1
0x2c74e79f72e @ 16 : 80 02 00 02 CreateClosure [2], [0], #2
0x2c74e79f732 @ 20 : c1 Star3
589 E> 0x2c74e79f733 @ 21 : 5e f9 03 f7 02 CallProperty1 r1, a0, r3, [2]
629 S> 0x2c74e79f738 @ 26 : 16 02 LdaCurrentContextSlot [2]
640 S> 0x2c74e79f73a @ 28 : a9 Return
Constant pool (size = 3)
0x2c74e79f6c1: [FixedArray] in OldSpace
- map: 0x25f0e90c0211 <Map(FIXED_ARRAY_TYPE)>
- length: 3
0: 0x02c74e79f611 <ScopeInfo FUNCTION_SCOPE>
1: 0x33eb42f83229 <String[7]: #forEach>
2: 0x02c74e79f671 <SharedFunctionInfo>
Handler Table (size = 0)
Source Position Table (size = 18)
0x02c74e79f741 <ByteArray[18]>

[generated bytecode for function: (0x02c74e79f671 <SharedFunctionInfo>)]
Bytecode length: 21
Parameter count 2
Register count 2
Frame size 16
Bytecode age: 0
610 S> 0x2c74e79f84e @ 0 : 16 02 LdaCurrentContextSlot [2]
0x2c74e79f850 @ 2 : aa 00 ThrowReferenceErrorIfHole [0]
0x2c74e79f852 @ 4 : c4 Star0
0x2c74e79f853 @ 5 : 0b 03 Ldar a0
617 E> 0x2c74e79f855 @ 7 : 38 fa 00 Add r0, [0]
0x2c74e79f858 @ 10 : c3 Star1
0x2c74e79f859 @ 11 : 16 02 LdaCurrentContextSlot [2]
614 E> 0x2c74e79f85b @ 13 : aa 00 ThrowReferenceErrorIfHole [0]
0x2c74e79f85d @ 15 : 0b f9 Ldar r1
0x2c74e79f85f @ 17 : 25 02 StaCurrentContextSlot [2]
0x2c74e79f861 @ 19 : 0e LdaUndefined
622 S> 0x2c74e79f862 @ 20 : a9 Return
Constant pool (size = 1)
0x2c74e79f801: [FixedArray] in OldSpace
- map: 0x25f0e90c0211 <Map(FIXED_ARRAY_TYPE)>
- length: 1
0: 0x02c74e79d1b1 <String[3]: #sum>
Handler Table (size = 0)
Source Position Table (size = 11)
0x02c74e79f869 <ByteArray[11]>
```

This time, the output contains two functions: sumFunctional itself and the arrow function we pass to forEach. The logic is a bit more complex, so let's go through it step by step.

```text
0x2c74e79f71e @ 0 : 83 00 01 CreateFunctionContext [0], [1]
0x2c74e79f721 @ 3 : 1a fa PushContext r0
```

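First, CreateFunctionContext [0], [1] creates a new function context, where [0] is the scope info index in the constant pool and [1] is the number of slots for variables. The context is needed because sum is captured by the arrow function we pass to forEach: the closure must be able to reach the variable, so it can't live in a register of sumFunctional's stack frame and is placed in this heap-allocated context instead. Then, PushContext r0 saves the current (outer) context in the r0 register and makes the new context the current one.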

```text
0x2c74e79f723 @ 5 : 10 LdaTheHole
0x2c74e79f724 @ 6 : 25 02 StaCurrentContextSlot [2]
0x2c74e79f726 @ 8 : 0c LdaZero
0x2c74e79f727 @ 9 : 25 02 StaCurrentContextSlot [2]
```

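StaCurrentContextSlot [2] stores the accumulator into slot 2 of the current context, which is where sum lives (the first slots of a context are reserved for V8's internal bookkeeping). The store happens twice: first with the hole, V8's internal marker for a let binding that hasn't been initialized yet (the temporal dead zone), and then with 0, the actual let sum = 0 initialization.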
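Why store the hole first? A let variable is in the temporal dead zone until its declaration runs, and since a closure could in principle read sum before that point, the context slot has to start out as "uninitialized". The hypothetical tdzDemo function below shows the behavior that the hole, and the ThrowReferenceErrorIfHole checks in the callback's byte code, implement: reading a let binding through a closure before its initialization throws a ReferenceError.

```javascript
function tdzDemo() {
  const read = () => sum; // a closure over `sum`, which is declared below
  let errorName = null;
  try {
    read(); // `sum` still holds "the hole" here: temporal dead zone
  } catch (e) {
    errorName = e.name;
  }
  let sum = 0; // initialization replaces the hole with 0
  return { errorName, after: read() };
}

const result = tdzDemo();
console.log(result.errorName, result.after); // ReferenceError 0
```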

```text
0x2c74e79f729 @ 11 : 2d 03 01 00 GetNamedProperty a0, [1], [0]
0x2c74e79f72d @ 15 : c3 Star1
```

GetNamedProperty a0, [1] reads the forEach method (entry [1] in the constant pool) from the input array, and Star1 stores it in r1.

```text
0x2c74e79f72e @ 16 : 80 02 00 02 CreateClosure [2], [0], #2
0x2c74e79f732 @ 20 : c1 Star3
0x2c74e79f733 @ 21 : 5e f9 03 f7 02 CallProperty1 r1, a0, r3, [2]
0x2c74e79f738 @ 26 : 16 02 LdaCurrentContextSlot [2]
0x2c74e79f73a @ 28 : a9 Return
```

Next, CreateClosure creates a new closure (a function object) from the SharedFunctionInfo at index [2] in the constant pool, which is the arrow function we pass to forEach ([0] is a feedback cell index and #2 holds flags). The closure captures the current context, which is how it can reach sum. Star3 stores the closure in r3. Then CallProperty1 r1, a0, r3, [2] calls the function in r1 (forEach) on a0 (the first argument of sumFunctional, our array) with r3 (the closure) as its single argument. Finally, LdaCurrentContextSlot [2] loads sum from the context into the accumulator; by now it holds the total accumulated by the callback, and Return returns it.
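Because CreateClosure runs on every call to sumFunctional, each call allocates a fresh function object (along with the fresh context created at the start). A hypothetical instrumented copy, sumFunctionalSpy, keeps the callbacks it creates so we can compare them; createdCallbacks is likewise an illustrative name, not part of the original code:

```javascript
const createdCallbacks = []; // hypothetical instrumentation

function sumFunctionalSpy(arr) {
  let sum = 0;
  const callback = (n) => {
    sum += n;
  };
  createdCallbacks.push(callback); // keep a reference to compare identities later
  arr.forEach(callback);
  return sum;
}

const first = sumFunctionalSpy([1, 2]);
const second = sumFunctionalSpy([3]);
// Same source code, but two distinct function objects: one closure per call.
console.log(first, second, createdCallbacks[0] === createdCallbacks[1]); // 3 3 false
```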

And now, let's quickly take a look at the closure:

```text
0x2c74e79f84e @ 0 : 16 02 LdaCurrentContextSlot [2]
0x2c74e79f850 @ 2 : aa 00 ThrowReferenceErrorIfHole [0]
0x2c74e79f852 @ 4 : c4 Star0
0x2c74e79f853 @ 5 : 0b 03 Ldar a0
0x2c74e79f855 @ 7 : 38 fa 00 Add r0, [0]
0x2c74e79f858 @ 10 : c3 Star1
0x2c74e79f859 @ 11 : 16 02 LdaCurrentContextSlot [2]
0x2c74e79f85b @ 13 : aa 00 ThrowReferenceErrorIfHole [0]
0x2c74e79f85d @ 15 : 0b f9 Ldar r1
0x2c74e79f85f @ 17 : 25 02 StaCurrentContextSlot [2]
0x2c74e79f861 @ 19 : 0e LdaUndefined
0x2c74e79f862 @ 20 : a9 Return
```

LdaCurrentContextSlot [2] loads sum from context slot 2 into the accumulator, and ThrowReferenceErrorIfHole throws a ReferenceError if it still holds the hole (constant-pool entry [0], the string sum, is used for the error message). Star0 stores the value in r0. Ldar a0 loads a0 (the current value from the array) into the accumulator, and Add r0 adds r0 to it (this is the sum + n part of sum += n). Star1 stores the result in r1. Slot 2 is then loaded and hole-checked again, this time for the assignment to sum; Ldar r1 loads the new total into the accumulator, and StaCurrentContextSlot [2] writes it back to the context. Finally, LdaUndefined and Return: a function without an explicit return value always returns undefined.
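Taking both functions together, sumFunctional behaves roughly like the following model, where the context is written out as an ordinary object. This is an illustration, not how V8 represents contexts: sumFunctionalModel is a hypothetical name, HOLE is a hypothetical stand-in for V8's internal hole value, and the comments map each line to the instructions above.

```javascript
// Hypothetical stand-in for V8's internal "hole" (uninitialized) value.
const HOLE = Symbol("hole");

function sumFunctionalModel(arr) {
  // CreateFunctionContext; PushContext: a heap object instead of a register
  const context = { sum: HOLE }; // LdaTheHole; StaCurrentContextSlot [2]
  context.sum = 0; // LdaZero; StaCurrentContextSlot [2]

  // CreateClosure: the callback captures `context`, not a copy of `sum`
  const callback = (n) => {
    if (context.sum === HOLE) throw new ReferenceError("sum is not initialized"); // ThrowReferenceErrorIfHole
    context.sum = context.sum + n; // LdaCurrentContextSlot [2]; Add r0; StaCurrentContextSlot [2]
    return undefined; // LdaUndefined; Return
  };

  arr.forEach(callback); // GetNamedProperty a0, [1] ("forEach"); CallProperty1
  return context.sum; // LdaCurrentContextSlot [2]; Return
}

console.log(sumFunctionalModel([1, 2, 3])); // 6
```

Every read and write of sum goes through the context object, whereas sumImperative keeps sum in the r0 register for the whole loop.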

You may be wondering where the iteration logic is. In short, it's in the Torque implementation of the forEach method for JS arrays. Torque is a statically typed, low-level programming language that V8 uses to write efficient and maintainable implementations of complex runtime code. V8 actually has several different implementations of the forEach method. You can find the full implementation here. I'll show just FastArrayForEach (because its name suggests that it should be fast):

```
transitioning macro FastArrayForEach(implicit context: Context)(
    o: JSReceiver, len: Number, callbackfn: Callable, thisArg: JSAny): JSAny
    labels Bailout(Smi) {
  let k: Smi = 0;
  const smiLen = Cast<Smi>(len) otherwise goto Bailout(k);
  const fastO = Cast<FastJSArray>(o) otherwise goto Bailout(k);
  let fastOW = NewFastJSArrayWitness(fastO);

  // Build a fast loop over the smi array.
  for (; k < smiLen; k++) {
    fastOW.Recheck() otherwise goto Bailout(k);

    // Ensure that we haven't walked beyond a possibly updated length.
    if (k >= fastOW.Get().length) goto Bailout(k);
    const value: JSAny = fastOW.LoadElementNoHole(k)
        otherwise continue;
    Call(context, callbackfn, thisArg, value, k, fastOW.Get());
  }
  return Undefined;
}
```

In the Torque code, which looks a lot like TypeScript, we can see the familiar imperative iteration approach. So our fancy functional approach converges with the imperative one somewhere under the hood, but with extra overhead: a full callback call for every element, on top of the context and closure we saw in the byte code.
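For comparison with sumImperative, here is a rough JavaScript transliteration of FastArrayForEach. It's a simplification: forEachSketch is a hypothetical name, and V8's witness rechecks and Bailout(k) paths (which hand over to a slower, fully spec-compliant loop) are replaced by a plain in check that skips holes and any elements removed during iteration.

```javascript
// Rough transliteration of FastArrayForEach (hypothetical name, simplified).
function forEachSketch(array, callbackfn, thisArg) {
  if (typeof callbackfn !== "function") {
    throw new TypeError("callbackfn is not a function");
  }
  const len = array.length; // read once, like smiLen
  for (let k = 0; k < len; k++) { // for (; k < smiLen; k++)
    if (!(k in array)) continue; // holes are skipped: "otherwise continue"
    const value = array[k]; // LoadElementNoHole(k)
    callbackfn.call(thisArg, value, k, array); // Call(context, callbackfn, thisArg, value, k, array)
  }
  return undefined; // return Undefined
}

// Usage: same visible behavior as Array.prototype.forEach on a sparse array.
const sparse = [10, , 30];
const seen = [];
forEachSketch(sparse, function (value, index) {
  this.push(`${index}:${value}`);
}, seen);
console.log(seen); // [ '0:10', '2:30' ]
```

The callbackfn.call(...) line is where forEach pays for its flexibility: each element goes through a separate call to the callback.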

Conclusion

Comparing the imperative and functional approaches in Node.js, the imperative for...of loop was about 4 times faster in our benchmark thanks to its direct iteration process. It avoids the overhead of a heap-allocated context, a closure, and a callback call for every element, which makes it more efficient.

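The functional forEach() approach, though often more readable, is slower. Interestingly, its byte code is shorter, so the cost isn't the complexity of the byte code but what it does: sum has to live in a heap-allocated context, a closure is created on every call, and the callback is invoked once per element.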

However, for smaller data sets, the performance difference between these two approaches is negligible, allowing developers to choose their preferred approach without significant performance implications. Thus, while the imperative approach is generally faster and should be used when performance is critical, the functional approach is still a viable option for smaller data sets.

Which one to choose is up to the developer, but the choice should rest on a general understanding of how each approach works.

That's it for today, thank you for your attention. Feel free to write a comment with your favorite JS constructions, and we'll sort out their performance.